Project Details

Project Description

Quantizing large language models has become a standard way to reduce their memory and computational costs. Existing methods typically break the problem down into independent layer-wise sub-problems and minimize the per-layer error, measured via various metrics.
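To make the layer-wise sub-problem concrete: for a single linear layer with weights W and a batch of calibration inputs X, one common per-layer metric is the squared output error ||WX - ŴX||²_F, where Ŵ is the quantized weight matrix. The Python sketch below is a minimal illustration under these assumptions, not the method of either paper listed on this page. It quantizes each row of W with symmetric round-to-nearest on a b-bit grid and picks, per row, the clipping factor (from a small, hypothetical candidate set) that gives the lowest output error. All function names and data sizes are hypothetical.

```python
import random


def quantize_row(row, bits, shrink=1.0):
    """Symmetric round-to-nearest quantization of one weight row (hypothetical helper).

    The scale maps shrink * max|w| onto the largest integer level;
    weights beyond that range are clipped to the grid's end points.
    """
    if bits < 2:
        raise ValueError("this sketch assumes at least 2 bits")
    levels = 2 ** (bits - 1) - 1               # largest integer level, e.g. 3 for bits=3
    max_abs = max(abs(w) for w in row) or 1.0  # avoid a zero scale for all-zero rows
    scale = shrink * max_abs / levels
    q = [max(-levels, min(levels, round(w / scale))) for w in row]
    return [qi * scale for qi in q]            # dequantized values on the grid


def layer_output_error(W, W_hat, X):
    """Squared Frobenius norm ||W X - W_hat X||^2, summed over calibration inputs X."""
    err = 0.0
    for x in X:
        for w_row, wq_row in zip(W, W_hat):
            diff = sum((a - b) * xi for a, b, xi in zip(w_row, wq_row, x))
            err += diff * diff
    return err


def quantize_layer(W, X, bits, shrinks=(1.0, 0.9, 0.8, 0.7)):
    """Per row, keep the clipping factor whose quantized row gives the lowest output error."""
    W_hat = []
    for row in W:
        best = min(
            (quantize_row(row, bits, s) for s in shrinks),
            key=lambda cand: layer_output_error([row], [cand], X),
        )
        W_hat.append(best)
    return W_hat


# Usage on random data (hypothetical sizes: 8 outputs, 16 inputs, 32 calibration samples).
random.seed(0)
W = [[random.gauss(0, 1) for _ in range(16)] for _ in range(8)]
X = [[random.gauss(0, 1) for _ in range(16)] for _ in range(32)]
rtn = [quantize_row(row, bits=3) for row in W]
tuned = quantize_layer(W, X, bits=3)
print(f"RTN error: {layer_output_error(W, rtn, X):.3f}")
print(f"tuned error: {layer_output_error(W, tuned, X):.3f}")
```

Because the squared output error decomposes over output rows, choosing the best candidate row by row also minimizes the whole layer's error over the candidate set; since the unclipped factor 1.0 is included, the tuned result is never worse than plain round-to-nearest.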

Vladimir Malinovskii, Denis Mazur, Ivan Ilin, Denis Kuznedelev, Konstantin Burlachenko, Kai Yi, Dan Alistarh, and Peter Richtárik

PV-Tuning: Beyond straight-through estimation for extreme LLM compression

Advances in Neural Information Processing Systems 37 (NeurIPS 2024)

Oral at NeurIPS 2024 (0.4% acceptance rate)

Vladimir Malinovskii, Andrei Panferov, Ivan Ilin, Han Guo, Peter Richtárik, and Dan Alistarh

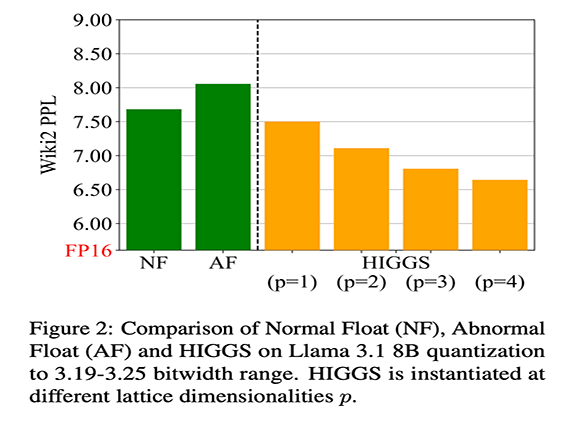

Pushing the limits of large language model quantization via the linearity theorem

arXiv:2411.17525

2024

About the Researcher

Affiliations

Education Profile

- PhD, Operations Research, Cornell University, 2007

- MS, Operations Research, Cornell University, 2006

- Mgr, Mathematics, Comenius University, 2001

- Bc, Management, Comenius University, 2001

- Bc, Mathematics, Comenius University, 2000

Research Interests

Prof. Richtárik's research interests lie at the intersection of mathematics, computer science, machine learning, optimization, numerical linear algebra, high-performance computing, and applied probability. He is interested in developing zeroth-, first-, and second-order algorithms for convex and nonconvex optimization problems involving big data, with a particular focus on randomized, parallel, and distributed methods. He is a co-inventor of federated learning, a framework introduced with Google for training machine learning models on mobile devices while preserving the privacy of users' data.

Selected Publications

- R. M. Gower, D. Goldfarb and P. Richtarik. Stochastic block BFGS: squeezing more curvature out of data. Proceedings of the 33rd International Conference on Machine Learning, pp. 1869-1878, 2016

- J. Konecny, J. Liu, P. Richtarik and M. Takac. Mini-batch semi-stochastic gradient descent in the proximal setting. IEEE Journal of Selected Topics in Signal Processing 10(2):242-255, 2016

- P. Richtarik and M. Takac. Parallel coordinate descent methods for big data optimization. Mathematical Programming 156(1):433-484, 2016

- R. M. Gower and P. Richtarik. Randomized iterative methods for linear systems. SIAM Journal on Matrix Analysis and Applications 36(4):1660-1690, 2015

- O. Fercoq and P. Richtarik. Accelerated, parallel and proximal coordinate descent. SIAM Journal on Optimization 25(4):1997-2023, 2015

Desired Project Deliverables

Recommended Student Background
